What’s the good news? It works. What’s the bad news? It takes a lot of qubits.

Today, Google announced a demonstration of quantum error correction on its Sycamore quantum processors. The new iteration of Sycamore isn’t dramatic: it has the same number of qubits as before, just better-performing ones. And getting quantum error correction to work isn’t really the news, since Google managed that a couple of years ago.

Instead, the signs of progress are more subtle. In earlier generations of processors, qubits were error-prone enough that adding more of them to an error-correction scheme introduced more errors than the extra correction could fix. With this new iteration, it’s possible to add more qubits and reduce the error rate.

That’s something we can build on.

A qubit is the functional unit of a quantum processor, and it can be anything (an atom, an electron, or a hunk of superconducting electronics) that can hold and manipulate a quantum state. The more qubits a machine has, the more capable it is. Once you have several hundred, it’s thought that you can perform calculations that would be difficult or impossible to complete on classical computer hardware.

That is, provided all the qubits behave properly, which, in general, they don’t. As a result, throwing more qubits at a problem increases the likelihood that an error will occur before a calculation can be completed. So while we now have quantum computers with over 400 qubits, any calculation that required all 400 would fail.
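A rough way to see why extra qubits hurt: if each qubit independently has a small chance of an error on every step, the chance that a calculation finishes without any error falls off exponentially with the number of qubits. The sketch below is a simplification that assumes independent errors and a hypothetical 0.1 percent error rate per qubit per step; real hardware errors are neither uniform nor fully independent.

```python
def success_probability(num_qubits: int, error_rate: float, steps: int = 1) -> float:
    """Chance that no qubit errs over the whole calculation.

    Assumes (hypothetically) that every qubit fails independently with the
    same probability `error_rate` on each of `steps` steps.
    """
    return (1.0 - error_rate) ** (num_qubits * steps)


# Hypothetical numbers: 0.1 percent error per qubit per step, 100 steps.
for n in (10, 100, 400):
    print(n, success_probability(n, 0.001, steps=100))
```

Even with a fairly optimistic per-qubit error rate, the success probability for hundreds of qubits running many steps is vanishingly small under this model.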

The widely accepted solution to this problem is to build error-corrected logical qubits. This involves distributing a quantum state across a group of connected hardware qubits. (In terms of computational logic, all of these hardware qubits can be treated as a single unit, hence the term “logical qubit.”) Error correction is made possible by additional qubits next to each member of the logical qubit. These can be measured to reveal whether an error has occurred among the hardware qubits that make up the logical qubit, without reading out the logical qubit’s state itself.

Now, if one of the hardware qubits that make up the logical qubit has an error, the quantum state isn’t ruined, because that hardware qubit holds only a portion of the logical qubit’s information. And measuring its neighbors will expose the fault, allowing it to be corrected.
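The detect-then-correct idea can be illustrated with a classical analogy: a three-bit repetition code, where parity checks between neighboring bits play the role of the measured helper qubits. This is only an analogy chosen for illustration, not the scheme Google used; real quantum codes cannot simply copy a state and must also catch phase errors, which this sketch ignores.

```python
def encode(bit: int) -> list[int]:
    """Spread one logical bit across three physical bits (classical repetition code)."""
    return [bit, bit, bit]


def syndrome(bits: list[int]) -> tuple[int, int]:
    """Parity checks between neighboring bits.

    Like the helper qubits, these reveal where an error sits without revealing
    the logical value: [0, 0, 0] and [1, 1, 1] both give (0, 0).
    """
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def correct(bits: list[int]) -> list[int]:
    """Flip the single bit the syndrome points to (handles at most one error)."""
    fixed = list(bits)
    position = {(1, 0): 0, (1, 1): 1, (0, 1): 2}.get(syndrome(bits))
    if position is not None:
        fixed[position] ^= 1
    return fixed


codeword = encode(1)
codeword[2] ^= 1            # a single bit-flip error on the third physical bit
print(syndrome(codeword))   # (0, 1): points at the third bit
print(correct(codeword))    # [1, 1, 1]
```

If two bits flip at once, the syndrome points at the wrong bit and the "correction" makes things worse, which is the same failure mode the next paragraph describes for error-prone hardware.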

The more hardware qubits dedicated to a logical qubit, the more robust it should be. Right now, there are just two problems. The first is that we don’t have hardware qubits to spare: implementing a robust error-correction scheme on the processors with the highest qubit counts would leave fewer than 10 logical qubits for a calculation. The second is that hardware qubit error rates are too high for any of this to work. Adding today’s qubits to a logical qubit doesn’t make it more robust; it raises the chance that too many errors occur at once to be corrected.
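This behavior is often summarized with a rule-of-thumb scaling for surface codes: the logical error rate goes roughly as the ratio of the physical error rate to a "threshold" value, raised to a power that grows with the size (distance) of the code. Below threshold, bigger codes help; above it, they hurt. The threshold of 1 percent and the prefactor of 0.1 below are illustrative assumptions, not measured values, and the formula is only a rough guide near threshold.

```python
def logical_error_rate(physical_error: float, distance: int,
                       threshold: float = 0.01, prefactor: float = 0.1) -> float:
    """Rule-of-thumb logical error rate for a distance-`distance` code.

    `threshold` and `prefactor` are hypothetical, illustrative values.
    """
    return prefactor * (physical_error / threshold) ** ((distance + 1) / 2)


for p in (0.005, 0.012):  # one rate below the assumed threshold, one above
    rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
    trend = "improves" if rates[-1] < rates[0] else "worsens"
    print(f"p={p}: {[f'{r:.3g}' for r in rates]} -> bigger code {trend}")
```

The same code sizes give opposite trends depending only on which side of the assumed threshold the hardware sits.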

The same, but not the same

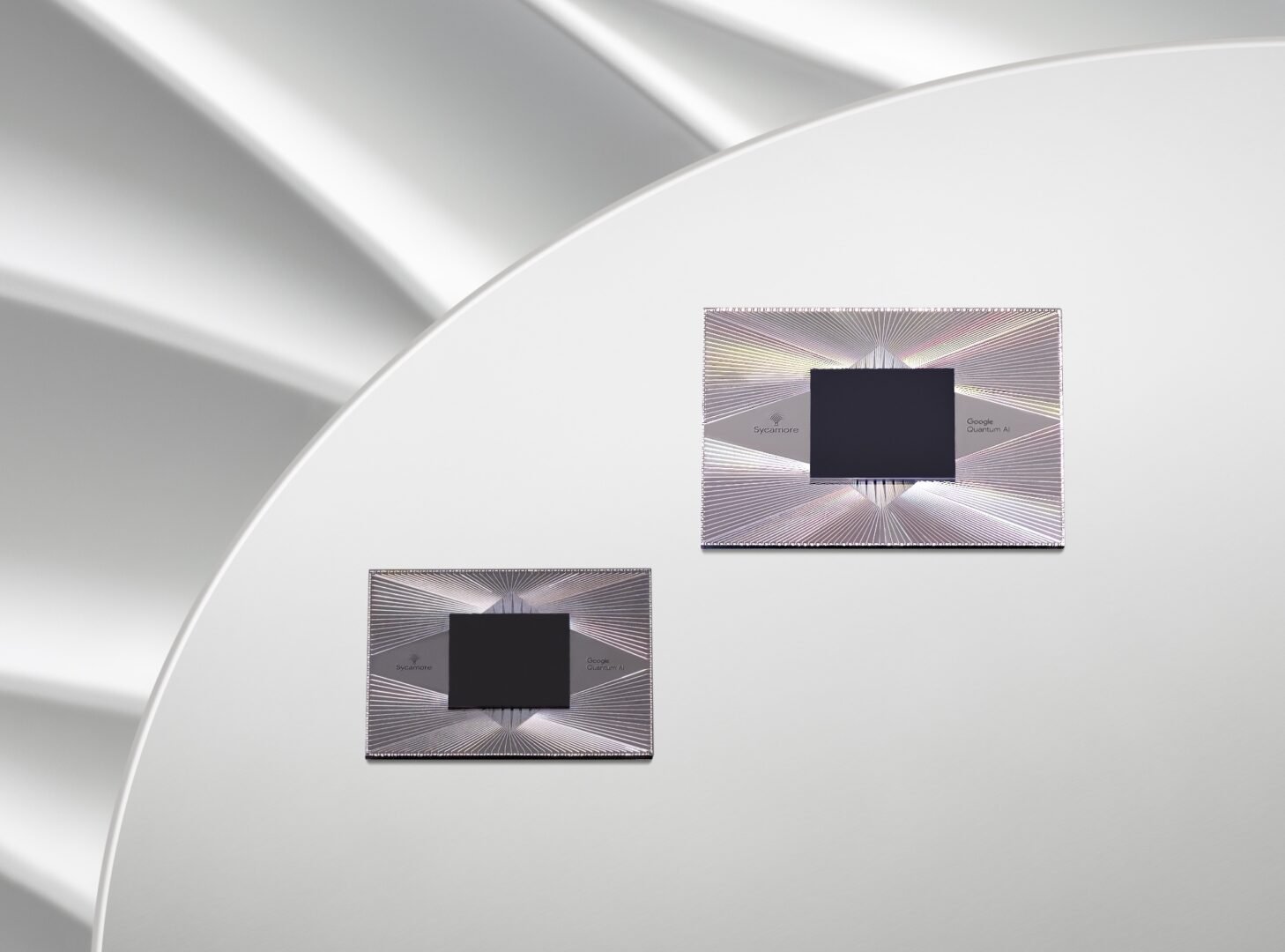

In response to these challenges, Google built a new iteration of its Sycamore processor with the same number and layout of hardware qubits as the previous one. But the company focused on lowering the error rate of individual qubits, so that the processor could perform more complex operations without failing. Google then used this hardware to test error-corrected logical qubits.

The study describes tests of two different configurations. In both, the data was stored on a square grid of qubits, each with neighboring qubits that were measured to implement error correction. The only difference was the size of the grid: three qubits by three in one case and five by five in the other. The former required 17 hardware qubits in total, the latter 49, nearly three times as many.
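The 17 and 49 figures are consistent with a common surface-code layout in which a d-by-d grid of data qubits is interleaved with d² − 1 measurement qubits. The text above doesn’t spell out the layout, so treat this as an assumption that happens to reproduce the quoted numbers.

```python
def surface_code_qubits(distance: int) -> dict[str, int]:
    """Hardware qubit count for a square code patch of the given size.

    Assumed layout: distance * distance data qubits plus
    distance * distance - 1 measurement qubits.
    """
    data = distance * distance
    measure = distance * distance - 1
    return {"data": data, "measure": measure, "total": data + measure}


small, large = surface_code_qubits(3), surface_code_qubits(5)
print(small["total"], large["total"])              # 17 49
print(round(large["total"] / small["total"], 2))   # 2.88
```

Under this layout, the qubit cost grows with the square of the grid width, which is why each step up in robustness gets expensive quickly.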

The research team measured performance in a variety of ways, but the key question was simple: which logical qubit had the lower error rate? If hardware qubit errors dominated, you’d expect tripling the number of hardware qubits to raise the error rate. But if Google’s improvements had made the hardware qubits good enough, the larger, more robust arrangement should lower it.

The larger scheme won, though it was close. Overall, the larger logical qubit had an error rate of 2.914 percent per error-correction cycle, versus 3.028 percent for the smaller one. That isn’t much of an edge, but it’s the first time such an advantage has been demonstrated. And both error rates are still too high to use either logical qubit in a complex calculation. Google estimates that hardware qubit performance would need to improve by another 20 percent or more to give large logical qubits a clear advantage.
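To put the two reported error rates in perspective, the arithmetic below computes the absolute gap, the relative reduction, and the ratio between them (sometimes called an error-suppression factor; a value above 1 means the bigger code helps). Only the two input numbers come from the results above; the rest is plain arithmetic.

```python
small_code_error = 3.028  # percent, 3x3 grid (reported result)
large_code_error = 2.914  # percent, 5x5 grid (reported result)

absolute_gain = small_code_error - large_code_error     # in percentage points
relative_gain = absolute_gain / small_code_error * 100  # percent of the smaller code's rate
suppression = small_code_error / large_code_error       # > 1 means the bigger code helps

print(f"{absolute_gain:.2f} points, {relative_gain:.1f}% relative, ratio {suppression:.3f}")
# 0.11 points, 3.8% relative, ratio 1.039
```

A ratio this close to 1 is why the result counts as a milestone rather than a practical advance.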

Google says in an accompanying press release that it will reach that point (running a single, long-lived logical qubit) in “2025-plus.” At that point, it will face many of the same problems IBM is now tackling: because only so many hardware qubits can fit on a chip, some way of networking many chips into a single compute unit must be devised. Google declined to give a time frame for testing solutions to that. (IBM says it will experiment with several approaches this year and next.)

To be clear, a 0.11-percentage-point improvement in error correction that takes roughly half of Google’s processor to host a single logical qubit is not a computational advance. We’re no closer to cracking encryption than we were the day before. But it does show that our qubits are already good enough to avoid making things worse, and that we got there well before anyone ran out of ideas for improving hardware qubit performance. That means we’re getting closer to the point where the technological hurdles we face have less to do with the qubits themselves.